videocr

Extract hardcoded subtitles from videos using the Tesseract OCR engine with Python.

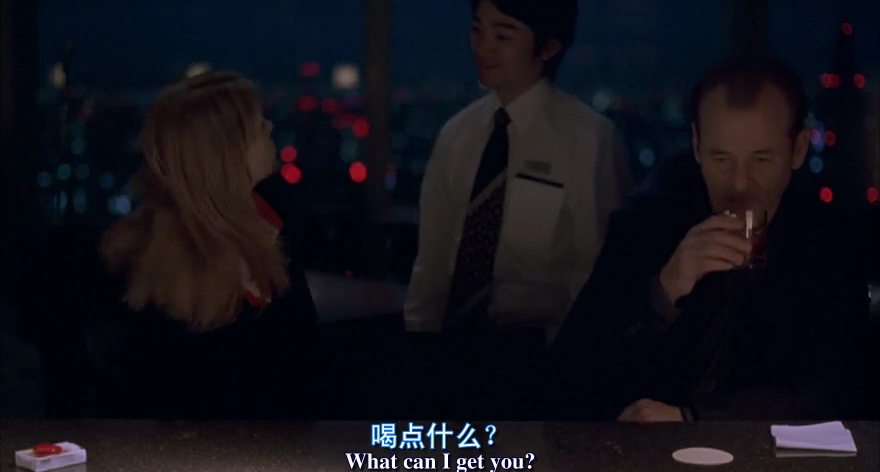

Input a video with hardcoded subtitles:

import videocr

print(videocr.get_subtitles('video.avi', lang='chi_sim+eng', sim_threshold=70))

Output:

0
00:00:01,042 --> 00:00:02,877
喝 点 什么 ?
What can I get you?

1
00:00:03,044 --> 00:00:05,463
我 不 知道
Um, I'm not sure.

2
00:00:08,091 --> 00:00:10,635
休闲 时 光 …
For relaxing times, make it...

3
00:00:10,677 --> 00:00:12,595
三 得 利 时 光
Bartender, Bob Suntory time.

4
00:00:14,472 --> 00:00:17,142
我 要 一 杯 伏特 加
Un, I'll have a vodka tonic.

5
00:00:18,059 --> 00:00:19,019
谢谢
Laughs Thanks.

Performance

The OCR process runs in parallel and is CPU-intensive. Extracting subtitles from a 20-second video takes 3 minutes on my dual-core laptop, so you may want more cores for longer videos.

API

videocr.get_subtitles(
    video_path: str, lang='eng', time_start='0:00', time_end='',
    conf_threshold=65, sim_threshold=90, use_fullframe=False)

Return the subtitles string in SRT format.
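Because the return value is a plain SRT string, it can be post-processed with ordinary string handling. The following is a minimal sketch of splitting such a string into entries; `Cue`, `parse_srt` and `_to_ms` are hypothetical helper names and are not part of videocr.

```python
import re
from typing import List, NamedTuple


class Cue(NamedTuple):
    """One SRT entry (hypothetical helper type, not part of videocr)."""
    index: int
    start_ms: int
    end_ms: int
    text: str


# SRT timestamps look like 00:00:01,042 (hours:minutes:seconds,milliseconds).
_TIME = re.compile(r"(\d+):(\d{2}):(\d{2}),(\d{3})")


def _to_ms(stamp: str) -> int:
    """Convert an SRT timestamp to milliseconds."""
    h, m, s, ms = map(int, _TIME.fullmatch(stamp.strip()).groups())
    return ((h * 60 + m) * 60 + s) * 1000 + ms


def parse_srt(srt: str) -> List[Cue]:
    """Split an SRT string into cues; entries are separated by blank lines."""
    cues = []
    for block in re.split(r"\n\s*\n", srt.strip()):
        lines = block.splitlines()
        if len(lines) < 2:
            continue  # skip empty or incomplete blocks
        start, end = lines[1].split("-->")
        cues.append(
            Cue(int(lines[0]), _to_ms(start), _to_ms(end), "\n".join(lines[2:]))
        )
    return cues
```

With the library installed, `parse_srt(videocr.get_subtitles('video.avi'))` would give a list of cues that can be filtered or re-timed before being written out.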

videocr.save_subtitles_to_file(
    video_path: str, file_path='subtitle.srt', lang='eng', time_start='0:00',
    time_end='', conf_threshold=65, sim_threshold=90, use_fullframe=False)

Write subtitles to file_path. If the file does not exist, it will be created automatically.
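As a usage sketch, the call below writes subtitles for only a short part of a video, which also keeps the CPU-heavy OCR step short. The wrapper name `extract_clip` is hypothetical, and the `'0:10'` and `'0:30'` time strings assume the same format as the default `'0:00'`.

```python
def extract_clip(video_path: str, srt_path: str = "subtitle.srt") -> None:
    """Write subtitles for a short part of the video to srt_path."""
    # Imported lazily so this sketch can be loaded without videocr installed.
    import videocr

    videocr.save_subtitles_to_file(
        video_path,
        file_path=srt_path,
        lang="chi_sim+eng",
        time_start="0:10",  # assumed format, mirroring the default '0:00'
        time_end="0:30",
        sim_threshold=70,
    )
```

Calling `extract_clip('video.avi')` would then create `subtitle.srt` in the current directory.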

Parameters

- lang

  The language of the subtitles in the video. All language codes on this page (e.g. 'eng' for English) and all script names in this repository (e.g. 'HanS' for simplified Chinese) are supported.

  Note that you can use more than one language. For example, 'hin+eng' means using Hindi and English together for recognition. More details are available in the Tesseract documentation.

  Language data files will be automatically downloaded to your $HOME/tessdata directory when necessary. You can read more about Tesseract language data files on their wiki page.

- time_start and time_end

  Extract subtitles from only a part of the video. The subtitle timestamps are still calculated according to the full video length.

- conf_threshold

  Confidence threshold for word predictions. Words with lower confidence than this threshold are discarded. The default value is fine for most cases.

  Make it closer to 0 if you get too few words from the predictions, or make it closer to 100 if you get too many excess words.

- sim_threshold

  Similarity threshold for subtitle lines. Neighbouring subtitles with larger Levenshtein ratios than this threshold will be merged together. The default value is fine for most cases.

  Make it closer to 0 if you get too many duplicated subtitle lines, or make it closer to 100 if you get too few subtitle lines.

- use_fullframe

  By default, only the bottom half of each frame is used for OCR. You can explicitly use the full frame if your subtitles are not within the bottom half of each frame.
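To make the two thresholds more concrete, here is a rough sketch of the kind of filtering and merging they describe. It is not videocr's actual implementation: the function names are hypothetical, `difflib.SequenceMatcher` from the standard library stands in for a Levenshtein ratio, and keeping the first line of each merged group is an assumption.

```python
from difflib import SequenceMatcher
from typing import List, Tuple


def filter_words(words: List[Tuple[str, float]], conf_threshold: float = 65) -> str:
    """Discard words whose confidence is lower than the threshold."""
    return " ".join(word for word, conf in words if conf >= conf_threshold)


def similarity(a: str, b: str) -> float:
    """Similarity on a 0-100 scale (difflib stand-in for a Levenshtein ratio)."""
    return 100 * SequenceMatcher(None, a, b).ratio()


def merge_similar(lines: List[str], sim_threshold: float = 90) -> List[str]:
    """Collapse neighbouring lines whose similarity exceeds the threshold."""
    merged: List[str] = []
    for line in lines:
        if merged and similarity(merged[-1], line) > sim_threshold:
            continue  # treated as a duplicate of the previous line (assumption)
        merged.append(line)
    return merged
```

In this sketch, lowering `sim_threshold` makes more neighbouring lines count as duplicates, while raising `conf_threshold` drops more low-confidence words, which matches the tuning advice given for the two parameters.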